StreamBED

UX and Product Design to explore Virtual Reality Multimodal Interactions.

TL;DR

Objective

The definition of a streambed is:

“the channel occupied or formerly occupied by a stream”

Environmental citizen science frequently relies on experience-based assessments, yet the volunteers who carry out these assessments often lack adequate training in observing and assessing natural elements.

Our objective is to analyze, design, and develop a system that trains volunteers' observation and assessment behavior in such environments.

Approach

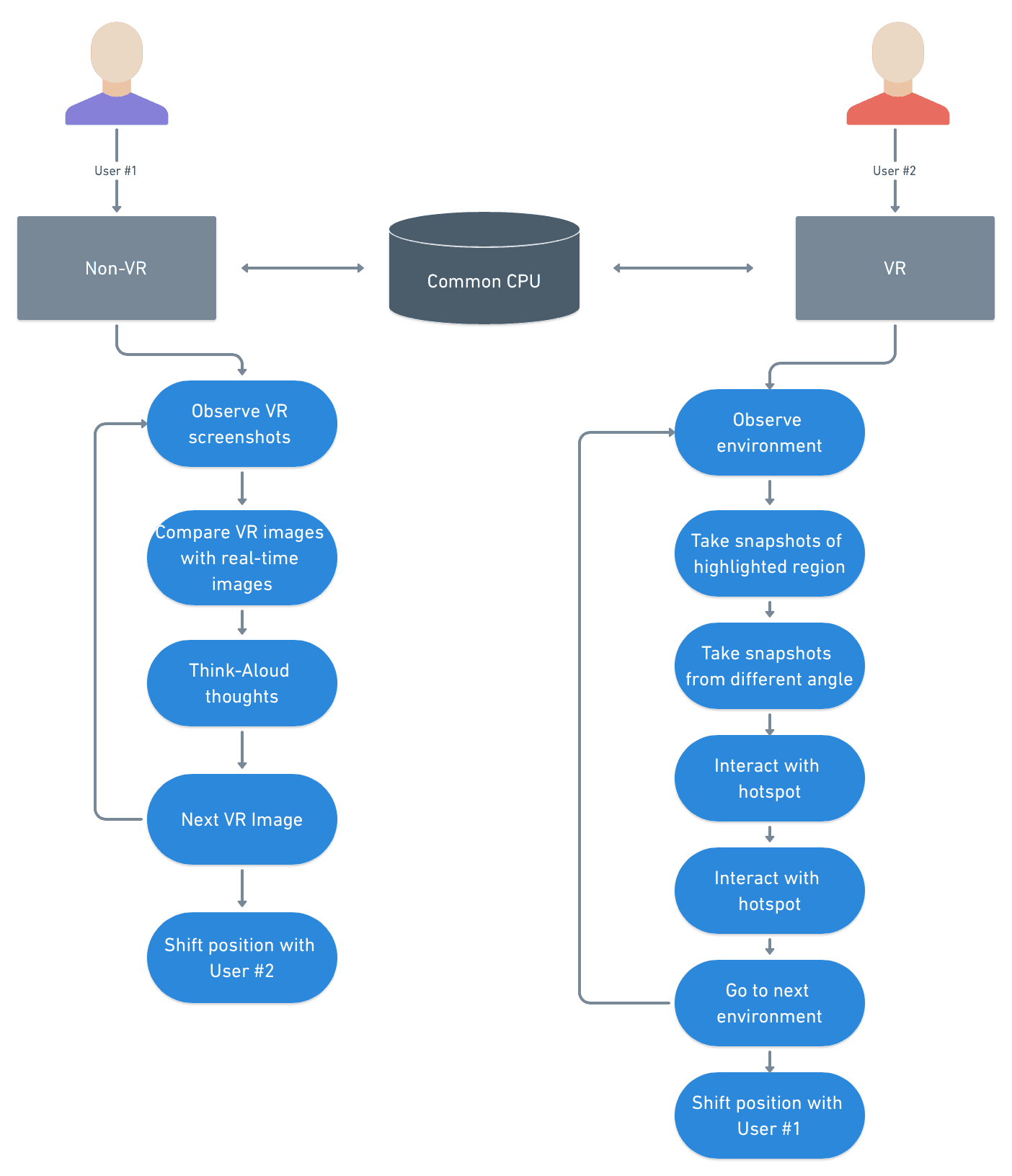

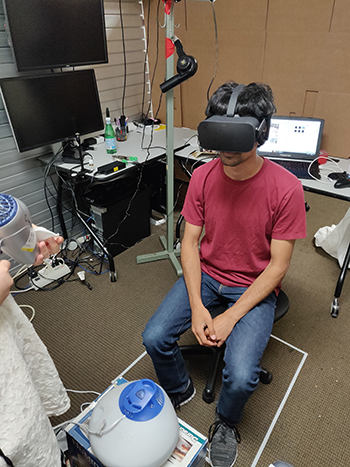

The final product will be a set of Virtual Reality simulations built in Unity from 360° videos previously recorded on a Gear360 camera. One user, wearing the Oculus headset, interacts with natural elements by describing each element and taking screenshots of it. A second user, on a non-VR platform, compares those screenshots with real photographs to judge how closely the two match. The two users then switch positions and repeat the same procedure.

This approach is intended to help users build habits of observing intrinsic elements of natural environments and giving feedback on them.

My Role

I initially joined the team to learn about Virtual Reality and ended up responsible for developing the VR platform. To make this happen, I started learning Unity and have moved beyond a beginner level of proficiency. My role emphasizes creating a good user experience on the Virtual Reality platform.

Tools Used:

Unity, Sketch, Sumerian, Hand-Sketch

Team:

- Alina Striner – Principal Researcher + Team Lead

- Parv Rustogi – Researcher + Hardware Guru

- Sharan Hegde – VR UX Designer

- Pranav K – Software Developer

- Connor Petrelle – Software Developer

What I learned

Brainstorming + Ideation

Environmental citizen science frequently relies on experience-based assessment, yet volunteers often lack sufficient training in understanding the environment. We therefore began brainstorming ways to support them, and designed a solution in which volunteers enter a virtual reality environment that lets us analyze their behavior while they are immersed in it.

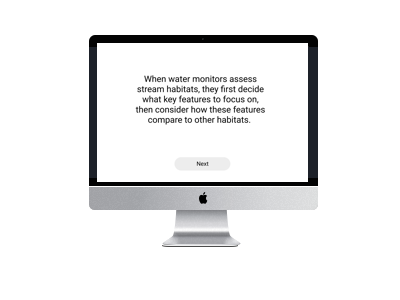

Thus, we decided to develop a Virtual Reality simulation in which volunteers immerse themselves in a natural environment. To better understand the differences between the virtual and real environments, we will also design a desktop application in which users compare images of the two.

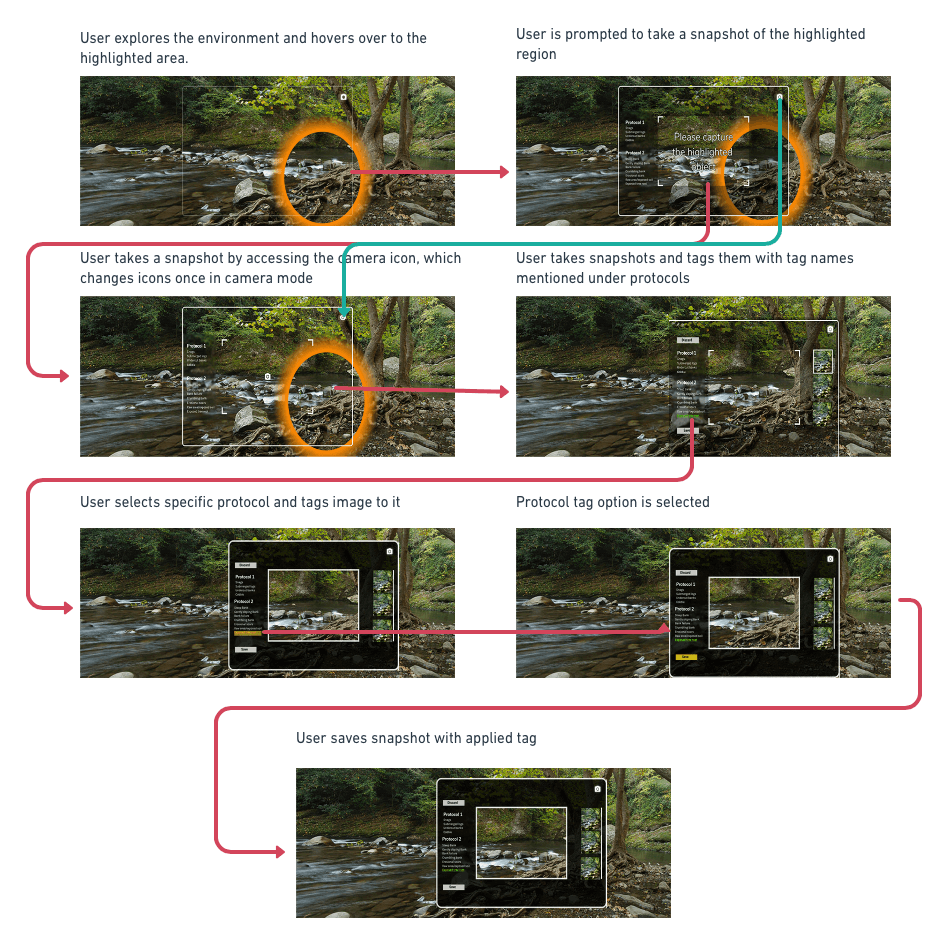

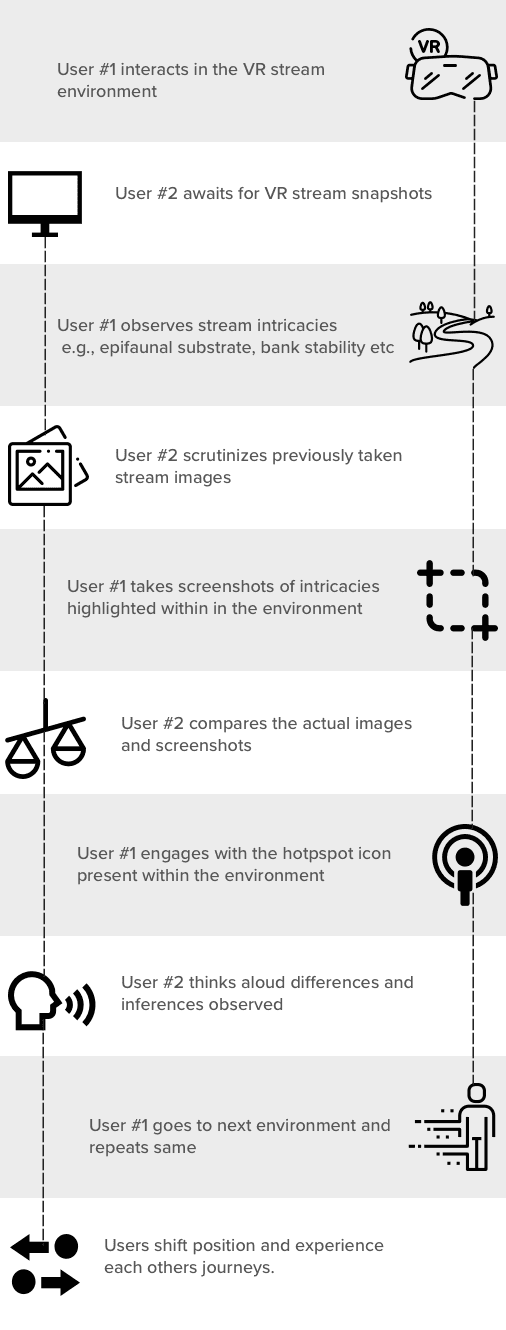

Planned User Journey

Workflow

The process requires two users working together. User 1 is on the non-VR platform, analyzing the screenshots taken by User 2 on the VR platform. Both users think aloud: User 1 verbally states their insights, while User 2 verbally explains the VR experience. This continues until User 2 reaches the end of the Virtual Reality experience. Upon completion, User 1 and User 2 swap positions and repeat the entire routine, each taking on the other's previous role.
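The workflow is essentially a turn-taking protocol with a role swap. The Python sketch below models only that ordering, under stated assumptions: `Round`, `plan_session`, and `run_round` are hypothetical names invented for illustration, and screenshots are represented as plain strings rather than images captured in Unity. It is not the project's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Round:
    """One pass through the VR experience (hypothetical model, not the project's code)."""
    vr_user: str       # wears the headset, thinks aloud, and takes screenshots
    desktop_user: str  # reviews screenshots on the non-VR platform, thinking aloud
    screenshots: list[str] = field(default_factory=list)


def plan_session(user_1: str, user_2: str) -> list[Round]:
    """User 2 starts in VR while User 1 analyzes; the second round swaps their roles."""
    return [
        Round(vr_user=user_2, desktop_user=user_1),
        Round(vr_user=user_1, desktop_user=user_2),
    ]


def run_round(rnd: Round, scenes: list[str]) -> list[tuple[str, str]]:
    """Continue scene by scene until the VR user reaches the end of the experience.

    Returns (screenshot, reviewer) pairs in the order they were handed over.
    """
    handoffs = []
    for scene in scenes:
        shot = f"{rnd.vr_user}:{scene}"  # stand-in for a screenshot captured in VR
        rnd.screenshots.append(shot)
        handoffs.append((shot, rnd.desktop_user))
    return handoffs


if __name__ == "__main__":
    for rnd in plan_session("User 1", "User 2"):
        print(run_round(rnd, ["riffle", "left bank"]))
```

Keeping each round as its own record makes it straightforward to compare what the same pair of users noticed before and after swapping roles.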

Question 1:

Why do we need two users for this task? Isn't one enough to analyze the experience?

Answer:

With the think-aloud protocol, the two users work well together because each stays engaged with the other's activity. Expressing intuitions verbally helps users make explicit connections between ideas, which in turn leads to further insights.

Question 2:

Why do they have to swap places?

Answer:

Swapping gives each user the chance to surface deeper insights that may have been missed in the first round.

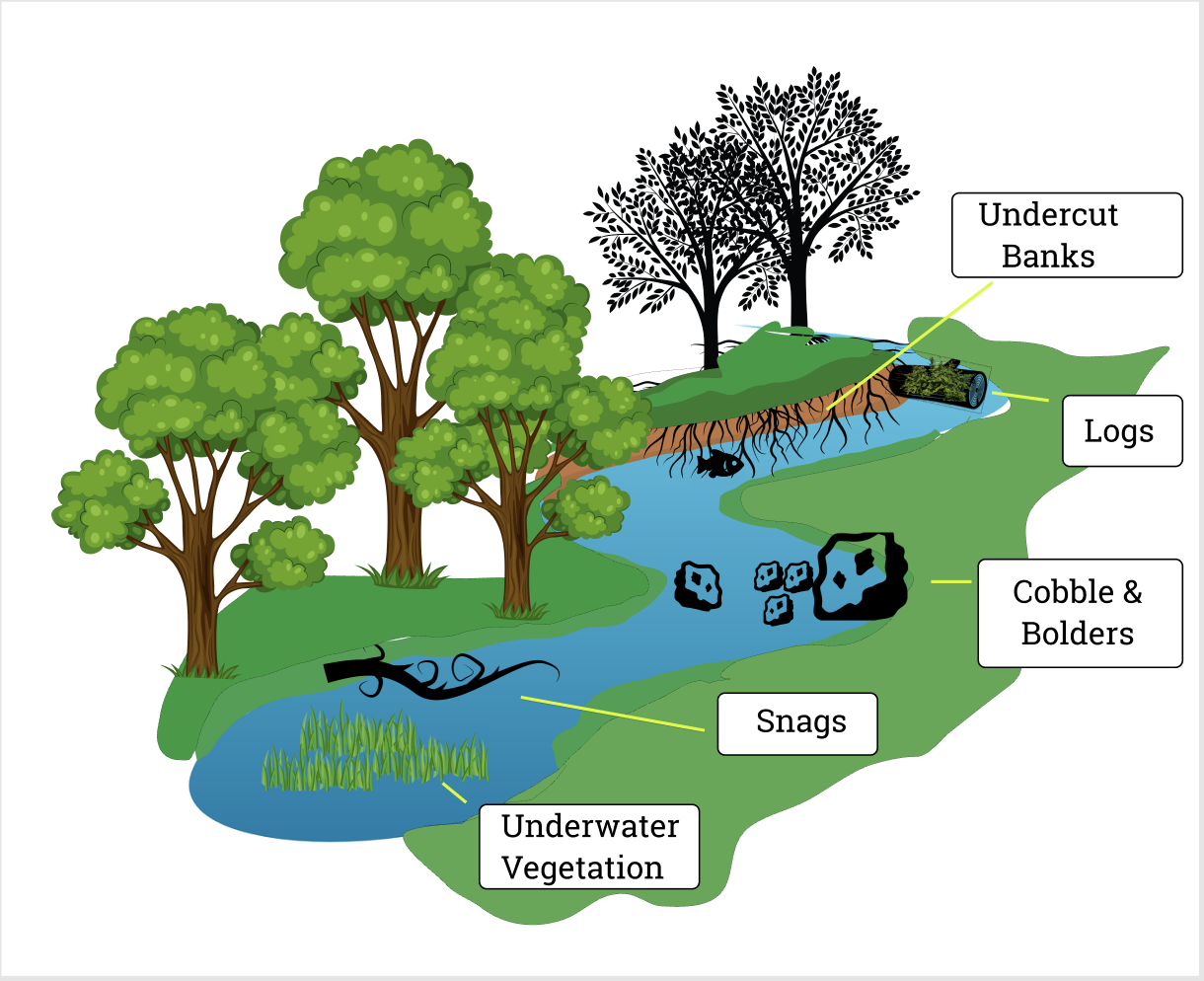

How do we assess the streams?

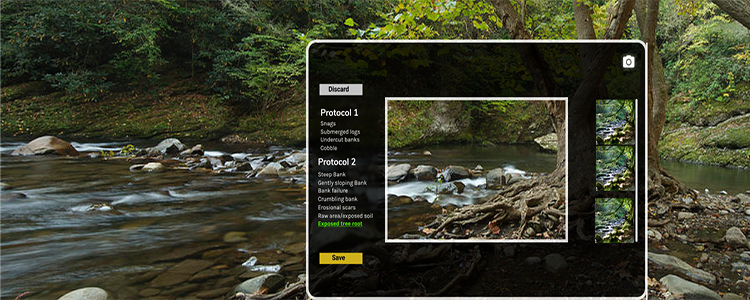

The EPA's Rapid Bioassessment Protocols define 13 measures that are used to qualitatively assess streams.

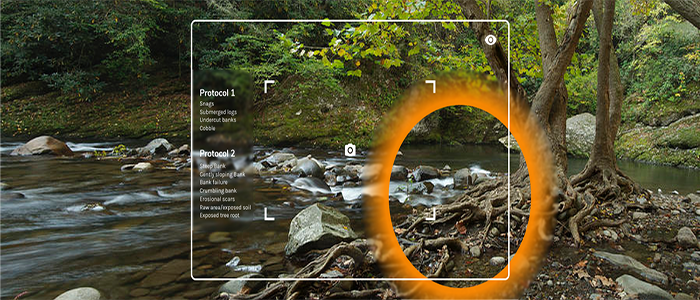

We decided to focus on three primary measures:

- Epifaunal Substrate

- Bank Stability

- Sediment Deposition
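To make the scoring of these measures concrete, the Python sketch below maps a score to a condition category. It assumes the four condition bands printed on the EPA RBP habitat assessment field sheets: epifaunal substrate and sediment deposition are scored from 0 to 20, while bank stability is scored separately for each bank from 0 to 10. The helper `condition` is a hypothetical name and not part of any EPA tool; the bands should be checked against the protocol document before use.

```python
# Hypothetical helper, not an EPA tool. Bands assumed from the RBP habitat field sheets:
#   0-20 parameters (epifaunal substrate, sediment deposition): 16-20, 11-15, 6-10, 0-5
#   0-10 per-bank parameters (bank stability, each bank):       9-10, 6-8, 3-5, 0-2
BANDS = {
    20: [(16, "Optimal"), (11, "Suboptimal"), (6, "Marginal"), (0, "Poor")],
    10: [(9, "Optimal"), (6, "Suboptimal"), (3, "Marginal"), (0, "Poor")],
}


def condition(score: int, scale_max: int = 20) -> str:
    """Return the condition category whose band contains `score`."""
    if scale_max not in BANDS:
        raise ValueError(f"unsupported scale 0-{scale_max}")
    if not 0 <= score <= scale_max:
        raise ValueError(f"score {score} is outside 0-{scale_max}")
    # Bands are listed from highest lower bound to lowest, so the first match wins.
    for lower, label in BANDS[scale_max]:
        if score >= lower:
            return label
    raise AssertionError("unreachable: the lowest band starts at 0")


print(condition(14))               # epifaunal substrate score -> Suboptimal
print(condition(4))                # sediment deposition score -> Poor
print(condition(7, scale_max=10))  # one bank's stability score -> Suboptimal
```

A participant's category for a scene could then be compared with a reference category for the same scene, which is one possible way to quantify assessment errors.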

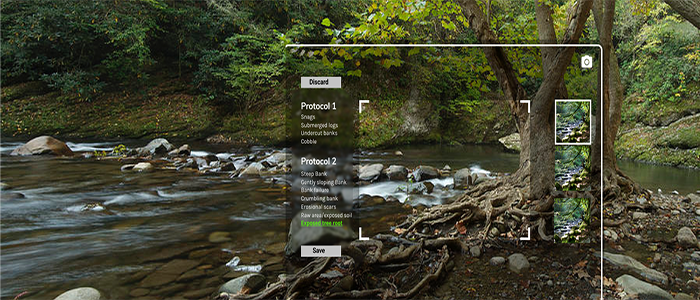

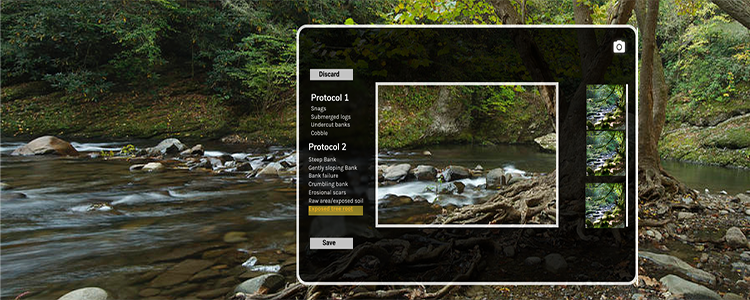

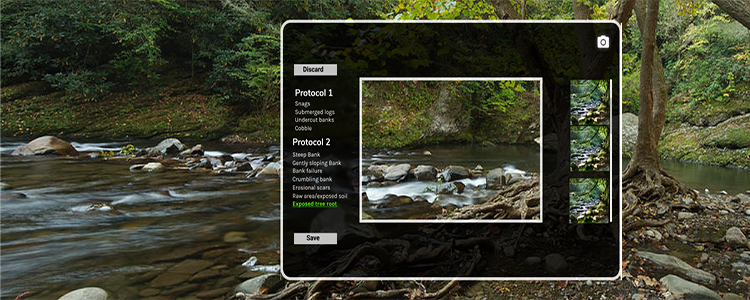

Example environments in which the user will be immersed, along with the key variables to identify

Multimodal Interactions

Multisensory interactions can have a positive effect on learning and influence performance on judgment and learning tasks. They can also reduce conceptual load, create salient memories and emotional experiences, and improve memory and the sense of presence.

For this project, the following senses are targeted to immerse the user in the experience:

- Vision (See)

- Audition (Hear)

- Olfaction (Smell)

- Tactition (Touch)

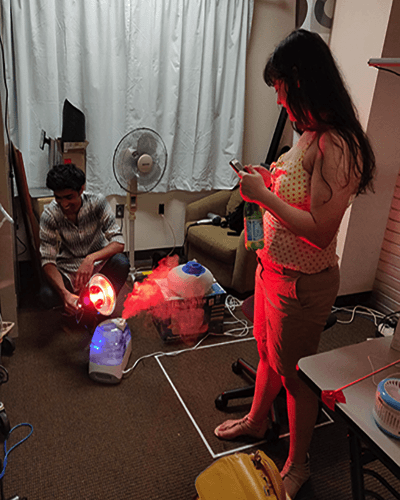

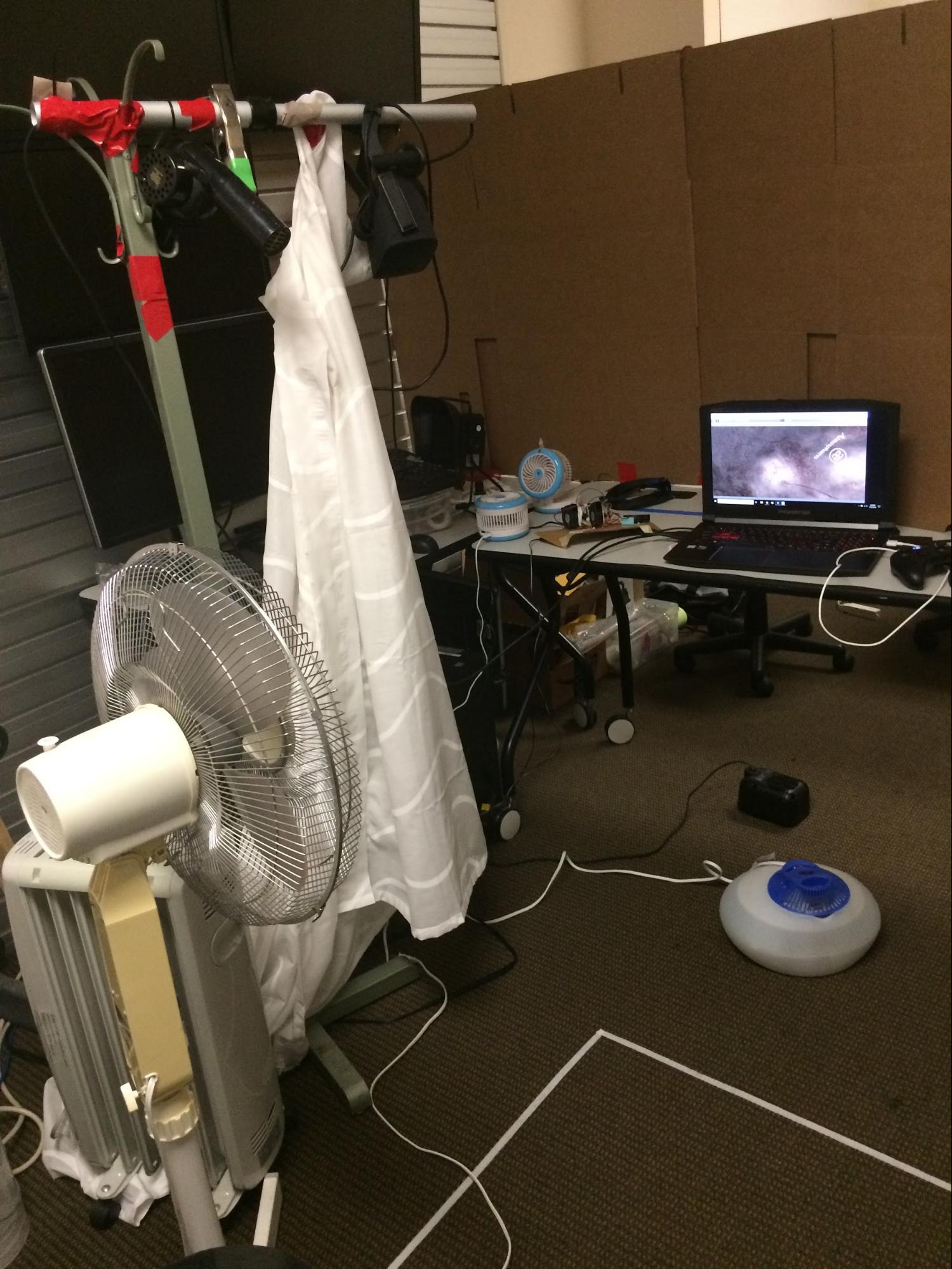

To engage these senses, we used the following external materials and devices:

- Heat Lamps

- Infrared Lights

- Scents

- Arduino-based Power Motor

- Oculus Rift

- Humidifiers

- Battery-powered Fans

User Studies


#Oculus Rift

#Fans for Tactition

#Motors for Olfaction

To validate whether citizen scientists can be trained to qualitatively assess streams in a virtual environment, we set up a test run in two streams with different climates to assess the differences users noticed between them.

#Setting up the Environment

#External Environment

The screenshots below show two streams with different climatic conditions. Stream 1 was windy and cool, with the sounds of red deer, water bats, and otters, and the smells of thistle, wisteria, and limestone. In contrast, Stream 2 had high heat and humidity, the sounds of cicadas, howler monkeys, and poison dart frogs, and the smells of decomposed wood, soil, and bananas.

Participants will experience the videos either with or without these multisensory cues. Participants in the multisensory condition will receive the cues through a series of Arduino-controlled diffusers, a mister and fan, and a mini desk space heater.
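The with and without cue conditions can be expressed as a small configuration that an Arduino controller could consume. The Python sketch below is a hypothetical illustration only: the `ON`/`PULSE`/`WAIT` command strings, the device names, the 30-second gap, and the assignment of the fan to Stream 1 and the heater plus mister to Stream 2 are assumptions inferred from the stream descriptions, not the project's actual firmware protocol. The pause between scent pulses is one possible way to limit the scent overlap listed under Challenges Faced.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StreamCues:
    scents: tuple[str, ...]   # one diffuser per scent (assumed)
    devices: tuple[str, ...]  # climate devices kept on for the whole clip (assumed mapping)


# Hypothetical mapping built from the stream descriptions in this case study.
STREAMS = {
    "stream1": StreamCues(scents=("thistle", "wisteria", "limestone"), devices=("fan",)),
    "stream2": StreamCues(scents=("decomposed wood", "soil", "bananas"),
                          devices=("heater", "mister")),
}


def cue_commands(stream: str, multisensory: bool, clear_seconds: int = 30) -> list[str]:
    """Build the ordered command list for one video (hypothetical command format)."""
    if not multisensory:
        return []  # control condition: the 360 video plays without extra cues
    cues = STREAMS[stream]
    commands = [f"ON {device}" for device in cues.devices]
    for i, scent in enumerate(cues.scents):
        if i > 0:
            # Pause so the previous scent can disperse before the next one is released.
            commands.append(f"WAIT {clear_seconds}")
        commands.append(f"PULSE diffuser:{scent}")
    return commands


print(cue_commands("stream1", multisensory=True))
print(cue_commands("stream2", multisensory=False))
```

Generating the control condition from the same function (an empty command list) keeps the two study conditions identical apart from the cues themselves.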

#Example VR Scene 1

#Example VR Scene 2

During assessments, users had to navigate different parts of the virtual stream to make accurate assessments, and it took time for them to associate their insights with specific features of the environment. Given this learning curve, it is not surprising that participants had high error rates.

#User 1 immersed in VR

#User 2 pre-VR tests

#User 3 interacting within the system

Challenges Faced:

- Scent Overlapping: While delivering scent within the system is relatively easy, delivering the right scent for each environment and preventing scents from overlapping is hard.

- Multisensory Information: Integrating and synthesizing information from cross-modal stimuli is inherently demanding for users. Hence, we had to cue users with questions about their reactions to each stimulus.

- Unity Inexperience: Our inexperience with Unity led to complications when switching between the environment systems.

Low-Fidelity Wireframing and Testing

High-Fidelity Designs

Desktop (Non VR) Wireframes:

VR Wireframes:

User Flows